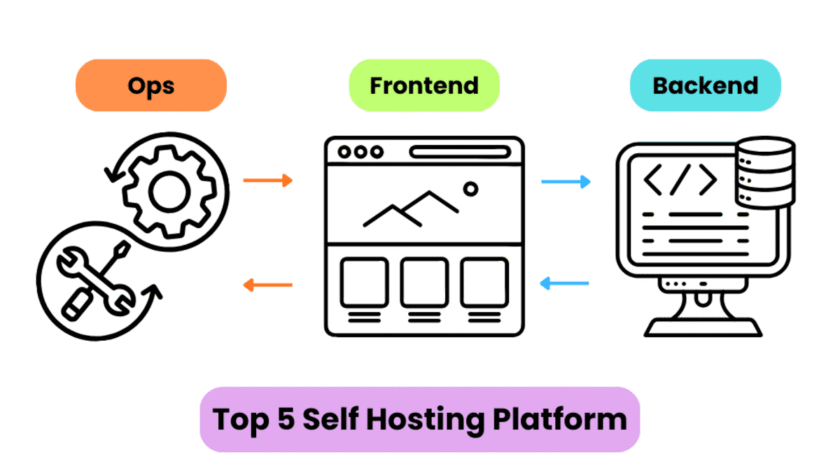

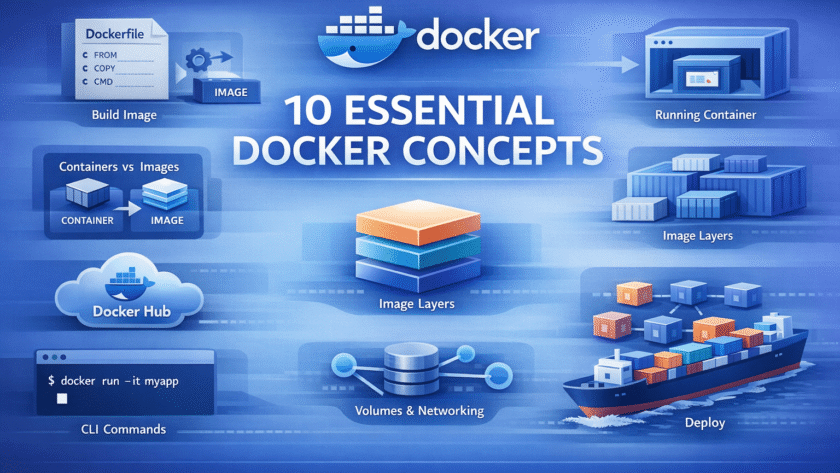

Image by Author

# Introduction

Imagine you are traveling and suddenly receive an urgent notification to update a pull request. You do not have your laptop with you, only your mobile phone. What do you do?

This is exactly the situation where a mobile code-editing app becomes useful.

These apps allow you to collaborate,…