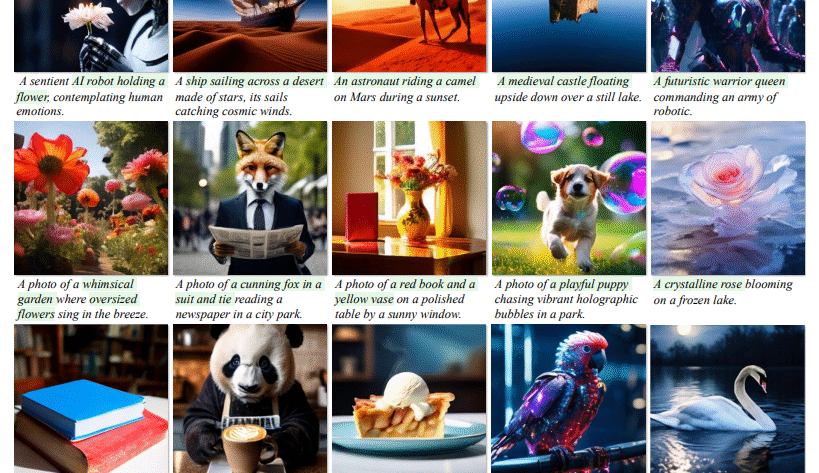

Multimodal modeling focuses on building systems to understand and generate content across visual and textual formats. These models are designed to interpret visual scenes and produce new images using natural language prompts. With growing interest in bridging vision and language, researchers are working toward integrating image recognition and image generation capabilities into a unified system.…