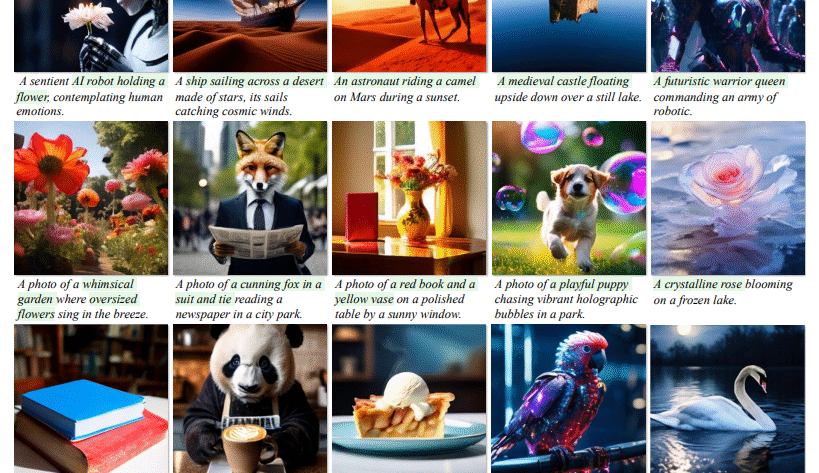

Why Multimodal Reasoning Matters for Vision-Language Tasks

Multimodal reasoning lets a model answer questions and make decisions by combining visual and textual information. It plays a central role in interpreting charts, answering image-based questions, and understanding complex visual documents. The goal is to make machines capable of using vision as…